2D Neural Fields with Learned Discontinuities

- 1 University of Toronto

- 2 New York University, Courant Institute of Mathematical Sciences

- 3 Adobe Research, San Francisco

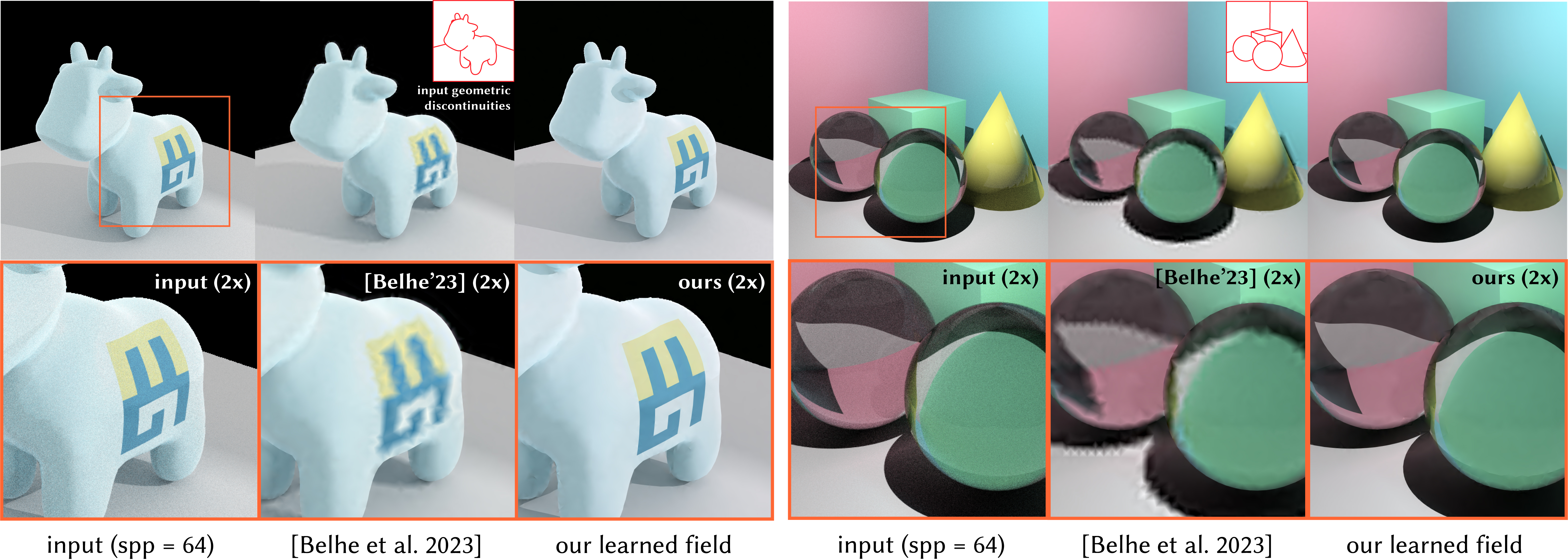

Existing image representations that support discontinuities, such as the discontinuity-aware 2D neural field [Belhe et al. 2023], require accurate 2D discontinuities as input. However, in applications such as denoising 3D renderings, not every type of discontinuity is available. False negatives caused by sharp textures and refracted geometry lead to blurring. We introduce a novel discontinuous neural field model that jointly approximates the target image and recovers discontinuities.